Ray tracing has been around in computer graphics for a surprisingly long time. It was used to generate images as early as the 1960s, and by the ’80s new algorithms, including path tracing, had been created. Yet the “holy grail of rendering” remained a distant dream for early home machines like the ZX Spectrum. You need only look at how long this machine takes to render a single ray-traced frame to see how far ray tracing acceleration has come in the past half-century.

It takes around 17 hours for the ZX Spectrum to render a single ray-traced frame. That’s one frame every 61,200 seconds, or 0.000016 fps.

But what a gorgeous frame it is. It's the output of a fun project by Gabriel Gambetta, creator of the Tiny Raytracer program and a senior software engineer at Google Zürich, which was brought to my attention by Hackaday. A long-time fan of the ZX Spectrum, Gambetta decided one day to see whether Sir Clive Sinclair's plucky early-’80s machine could cope with ray tracing. The surprising answer is that, yes, it sort of can.

As detailed in his blog post on the project, Gambetta ported the basic ray tracing code from his book to BASIC, the programming language built into the ZX Spectrum. Without too much tweaking, he found that this code produced a simple image even on the ZX Spectrum’s limited hardware: a 3.5MHz Z80A processor and, in the base model, as little as 16KB of RAM.

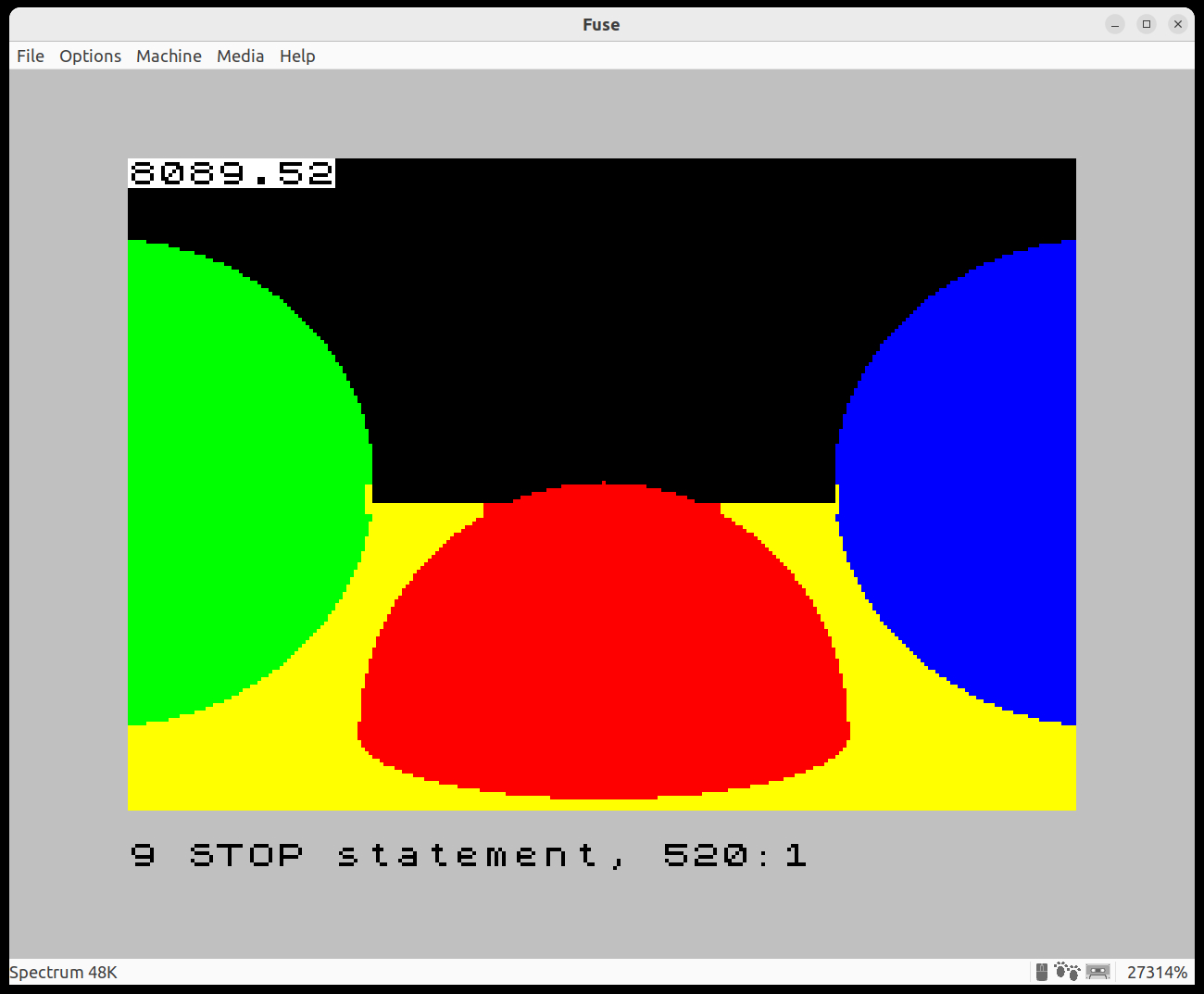

The image is just four blobs of colour: yellow, red, green, and blue. This process took nearly 15 minutes. On a modern PC, the same scene would take a fraction of a second.

“From the beginning I knew implementing some sort of raytracer was possible because the theory and the math are relatively straightforward,” Gambetta tells me. “But I didn’t know if it would run in a sensible amount of time, because this thing was slow for modern standards.”

Ray tracing is computationally expensive. It works by casting a ray from the camera through each pixel of the image until it hits an object in the scene. Casting further rays from that point can generate lighting effects such as shadows, reflections, and refraction. Producing a high-resolution image with realistic lighting, even with just a single ray per pixel, demands millions of rays per frame. It takes clever algorithms, bounding boxes, and heaps of acceleration to do this in real time for today’s ray-traced games.
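To make the "one ray per pixel" idea concrete, here is a minimal Python sketch of a sphere-only ray caster. It assumes a camera at the origin looking down the z axis through a 1 x 1 viewport one unit away, a common textbook setup; the names `Sphere`, `intersect_ray_sphere`, `trace_ray`, and `render` are hypothetical illustrations, not Gambetta's BASIC code.

```python
import math
from dataclasses import dataclass


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


@dataclass
class Sphere:
    center: tuple
    radius: float
    color: str


def intersect_ray_sphere(origin, direction, sphere):
    """Solve |O + tD - C|^2 = r^2 for t; return (inf, inf) on a miss."""
    co = sub(origin, sphere.center)
    a = dot(direction, direction)
    b = 2 * dot(co, direction)
    c = dot(co, co) - sphere.radius ** 2
    disc = b * b - 4 * a * c
    if disc < 0:
        return math.inf, math.inf
    root = math.sqrt(disc)
    return (-b + root) / (2 * a), (-b - root) / (2 * a)


def trace_ray(origin, direction, spheres, t_min, t_max):
    """Return the colour of the closest sphere hit in [t_min, t_max], or None."""
    closest_t, closest = math.inf, None
    for s in spheres:
        for t in intersect_ray_sphere(origin, direction, s):
            if t_min <= t <= t_max and t < closest_t:
                closest_t, closest = t, s
    return closest.color if closest else None


def render(width, height, spheres):
    """Cast one primary ray per pixel, top row first."""
    rows = []
    for y in range(height // 2, -height // 2, -1):
        row = []
        for x in range(-width // 2, width // 2):
            direction = (x / width, y / height, 1.0)  # point on the viewport
            row.append(trace_ray((0, 0, 0), direction, spheres, 1, math.inf))
        rows.append(row)
    return rows
```

Even at the 32 x 22 resolution Gambetta started with, that is 704 rays, each needing a square root per sphere it might hit, which is a lot of floating-point work for an interpreted BASIC on a Z80.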

The ZX Spectrum, by contrast, can produce only a handful of colours (fifteen in total, counting its two brightness levels and black) at a resolution of 256 x 192 pixels. Nor can it put any colour in any pixel. The screen is divided into 8 x 8 pixel blocks, and each block can hold only two colours at once. This was done to minimise memory use. Overall it was a great decision, as it hugely brought down costs at a time when computers were not known for being affordable, but it made displaying much more than text a bit of a nightmare.


“The first version of the ZX Spectrum had a grand total of 16 KB of RAM, so memory efficiency was absolutely critical (I had the considerably more luxurious 48 KB model),” Gambetta said in the blog post. “To help save memory, video RAM was split in two blocks: a bitmap block, using one bit per pixel, and an attributes block, using one byte per 8 x 8 block of pixels. The attributes block would assign two colors to that block, called INK (foreground) and PAPER (background).”
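The memory savings Gambetta describes are easy to put numbers on. This Python sketch assumes the standard 48K Spectrum screen layout (bitmap at address 16384, attributes at 22528) and the usual attribute byte format, with INK in bits 0 to 2, PAPER in bits 3 to 5, BRIGHT in bit 6, and FLASH in bit 7. The helper names `attribute_byte` and `attribute_address` are hypothetical.

```python
BITMAP_START = 16384  # 0x4000: one bit per pixel
ATTR_START = 22528    # 0x5800: one byte per 8 x 8 block

# Colour numbers 0-7 as used by INK and PAPER.
COLOURS = ["black", "blue", "red", "magenta", "green", "cyan", "yellow", "white"]

BITMAP_BYTES = 256 * 192 // 8            # 6,144 bytes
ATTR_BYTES = (256 // 8) * (192 // 8)     # 32 x 24 blocks = 768 bytes
# Eight colours at two BRIGHT levels, but bright black looks the same as black.
DISTINCT_COLOURS = len(COLOURS) * 2 - 1


def attribute_byte(ink, paper, bright=False, flash=False):
    """Pack INK (bits 0-2), PAPER (bits 3-5), BRIGHT (bit 6), FLASH (bit 7)."""
    return (int(flash) << 7) | (int(bright) << 6) | (paper << 3) | ink


def attribute_address(row, col):
    """Address of the attribute byte for the 8 x 8 block at (row, col)."""
    return ATTR_START + 32 * row + col
```

The whole screen fits in 6,912 bytes. A naive layout storing a full 8-colour index for every pixel would need at least 3 bits per pixel, roughly 18KB, which is more than the base model's entire RAM.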

Gambetta’s first image is effectively a grid coloured in by rays cast into the scene. At just 32 x 22 pixels, it maps more or less directly onto the 8 x 8 pixel blocks, with each of its pixels displayed as an entire 8 x 8 block.
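One way to picture this low-resolution approach is to trace one colour per block and write it straight into attribute memory as PAPER, leaving the bitmap all zeros so every pixel in the block shows that colour. This is a hedged Python sketch: `colour_at` is a hypothetical stand-in for the ray tracer that returns a Spectrum colour number (0 to 7), and the whole thing is not necessarily how Gambetta's BASIC program writes to the screen.

```python
ATTR_START = 22528  # start of attribute memory on a 48K Spectrum


def low_res_frame(colour_at, cols=32, rows=22):
    """Map one traced colour per 8 x 8 block onto its PAPER attribute.

    colour_at(col, row) is a hypothetical callback returning 0-7.
    With the bitmap left at zero, every pixel shows PAPER, so each
    block becomes a single solid colour.
    """
    attrs = {}
    for row in range(rows):
        for col in range(cols):
            colour = colour_at(col, row)  # one ray per block
            attrs[ATTR_START + 32 * row + col] = colour << 3  # PAPER bits 3-5, INK 0
    return attrs
```

Because no pixel ever needs a second colour inside its block, this version sidesteps attribute clash entirely, at the cost of a very chunky image.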

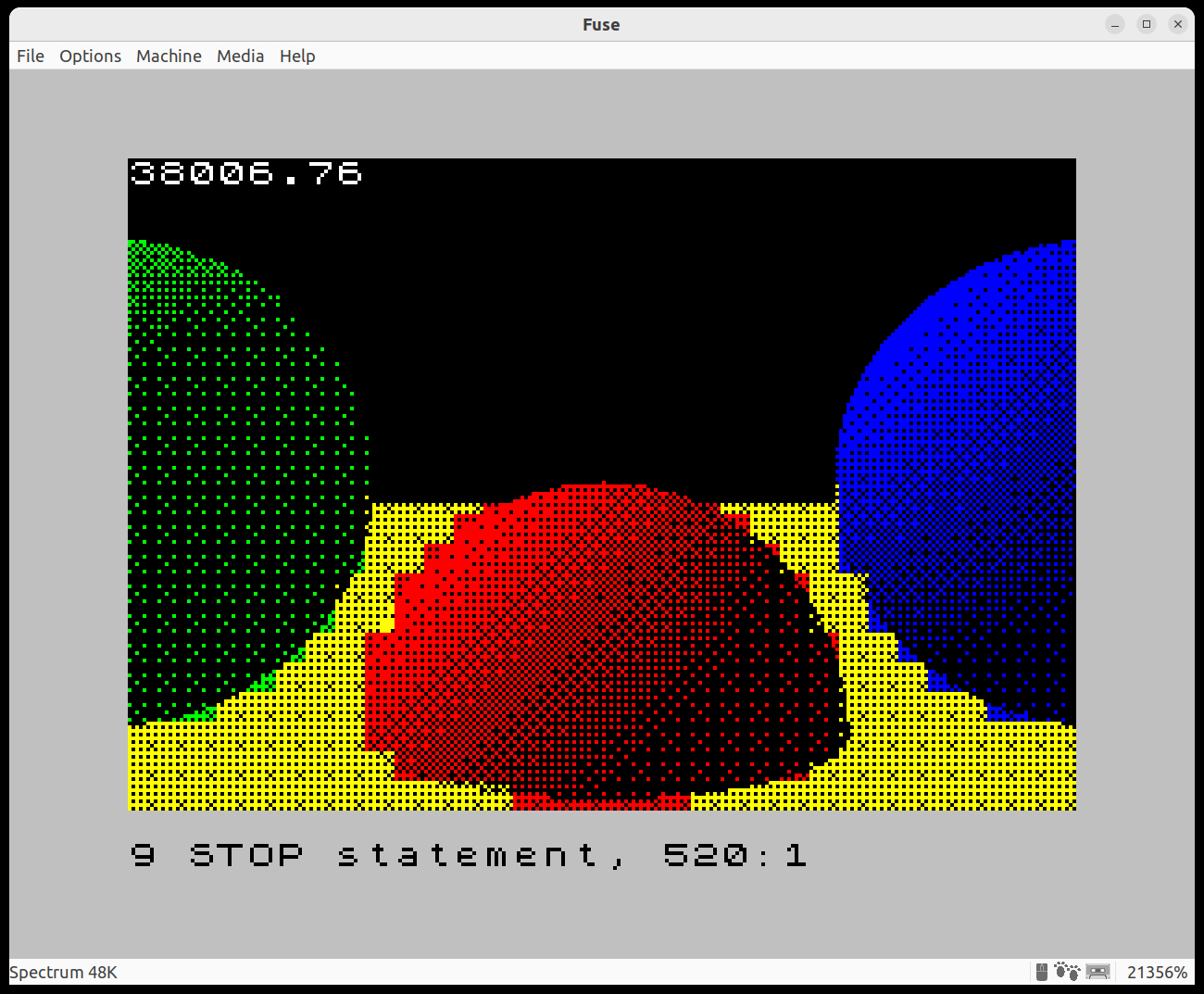

The downside of the ZX Spectrum’s memory-savvy block-based solution is what’s called attribute clash. This is essentially the inability to display any more than two colours within an 8 x 8 pixel block.

Gambetta’s next step was to raise the resolution to near-maximum at 256 x 176, effectively drawing individual pixels, but doing so meant running straight into attribute clash.

“Increasing the resolution is easy. Dealing with attribute clash, not so much.”

There’s no way to eliminate attribute clash; it’s an inherent and idiosyncratic part of the ZX Spectrum. In the end, Gambetta’s higher-resolution image is near enough perfect. Just don’t look too closely at some of the points where three colours meet.
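To see why three colours meeting in one block is a problem, consider what any program has to do when a block wants more than two colours. The Python sketch below shows one simple strategy, which is an assumption and not necessarily Gambetta's: keep the two most common colours as PAPER and INK, and let every other pixel fall back to PAPER. The function name `resolve_block` is hypothetical.

```python
from collections import Counter


def resolve_block(pixels):
    """Reduce an 8 x 8 block (64 colour numbers, row-major) to two colours.

    Returns (ink, paper, bitmap), where bitmap holds 8 bytes and a set
    bit means INK. The leftmost pixel of each row is bit 7.
    """
    counts = Counter(pixels).most_common(2)
    paper = counts[0][0]
    ink = counts[1][0] if len(counts) > 1 else paper
    bitmap = []
    for y in range(8):
        byte = 0
        for x in range(8):
            if ink != paper and pixels[y * 8 + x] == ink:
                byte |= 0x80 >> x
        bitmap.append(byte)
    return ink, paper, bitmap  # any third colour has been lost to PAPER
```

Whatever rule is chosen, a third colour in the block has to be replaced by one of the other two, and that substitution is exactly the visible clash at the points where three colours meet.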

The strain of going from a 32 x 22 image to a 256 x 176 one, with 64 times as many pixels, is evident in how much longer the second image took to render: from 879.75 seconds (nearly 15 minutes) to 61,529.88 seconds (over 17 hours). Luckily, some optimisations and time-saving tweaks brought this down to 8,089.52 seconds, or roughly two and a quarter hours.
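The render times above can be checked with a few lines of arithmetic. This Python snippet only uses figures quoted in this article. It shows that the slowdown (about 70x) tracked the 64x increase in pixel count fairly closely, and that the optimisations bought roughly a 7.6x speedup.

```python
low_res_s = 879.75      # 32 x 22 render
high_res_s = 61_529.88  # 256 x 176 render, before optimisation
optimised_s = 8_089.52  # 256 x 176 render, after optimisation

pixel_ratio = (256 * 176) / (32 * 22)  # 45,056 / 704 pixels
time_ratio = high_res_s / low_res_s    # how much slower the big render was
speedup = high_res_s / optimised_s     # gain from the optimisations
optimised_hours = optimised_s / 3600   # about 2 h 15 min
fps_17h = 1 / (17 * 3600)              # one frame every 61,200 seconds

print(f"{pixel_ratio:.0f}x pixels, {time_ratio:.1f}x time")
print(f"{speedup:.1f}x faster, {optimised_hours:.2f} h, {fps_17h:.7f} fps")
```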

And we haven’t even got to the really cool bit yet! As I mentioned before, ray tracing can determine each pixel’s colour, but it can also be used to produce various lighting effects. Think of Alan Wake 2 and how vibrant and realistic some of the lighting is in that game. The ZX Spectrum could never get close, as it only has a handful of colours to work with, so instead Gambetta simulates how light interacts with the scene through dithering: mixing pixels of a block’s two colours in patterns to suggest shades in between.
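Dithering turns a brightness value into a pattern of on and off pixels. The Python sketch below uses ordered (Bayer 4 x 4) dithering, which is an assumption chosen for illustration; the article doesn't say which dithering scheme Gambetta used. Here `intensity` is a hypothetical 0 to 1 light level from the ray tracer, and a set bit means the pixel shows INK (lit) rather than PAPER (shadow).

```python
# Standard 4 x 4 Bayer threshold matrix (values 0-15).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def ink_on(intensity, x, y):
    """True if pixel (x, y) should show INK for a light level in [0, 1]."""
    threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16
    return intensity > threshold


def dither_block(intensity):
    """Return the 8 bitmap bytes for one 8 x 8 block at a uniform light level."""
    rows = []
    for y in range(8):
        byte = 0
        for x in range(8):
            if ink_on(intensity, x, y):
                byte |= 0x80 >> x  # leftmost pixel is bit 7
        rows.append(byte)
    return rows
```

Because only the bitmap changes and each block still uses just two colours, this kind of shading stays within the attribute rules while giving the eye roughly 17 apparent brightness levels per colour pair.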

The result is genuinely awesome for a lil’ machine like this: a ray-traced, 3D-looking image that somehow works despite all the limits on which colours can be used and where.

“I ran this iteration, and honestly, I stared at it in disbelief for a good minute,” Gambetta said.

What’s more, he tweaks his ray tracer code further to implement shadows. By casting a ray from each point towards the directional light source and checking whether any sphere intersects it, he was able to produce the final image in this experiment, which is a remarkable result. Sure, even with optimisations, it takes around 17 hours to render a single frame, but hey, I’m wholly impressed.
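A shadow test like the one described above needs only the ray-sphere intersection the renderer already has. This is a minimal Python sketch, assuming spheres given as hypothetical `(center, radius)` tuples and a directional light, so the shadow ray runs to infinity. The small `eps` keeps a surface from shadowing itself due to rounding. Names here are illustrative, not Gambetta's.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def hit_ts(origin, direction, center, radius):
    """Ray parameters t where the ray meets the sphere; empty on a miss."""
    co = sub(origin, center)
    a = dot(direction, direction)
    b = 2 * dot(co, direction)
    c = dot(co, co) - radius * radius
    disc = b * b - 4 * a * c
    if disc < 0:
        return ()
    root = math.sqrt(disc)
    return ((-b - root) / (2 * a), (-b + root) / (2 * a))


def in_shadow(point, light_dir, spheres, eps=1e-3):
    """True if any sphere blocks the ray from point towards a directional light."""
    return any(
        t > eps
        for center, radius in spheres
        for t in hit_ts(point, light_dir, center, radius)
    )
```

If `in_shadow` returns True, the point gets only ambient light (a darker dither pattern); otherwise the directional light's contribution is added as well.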


Reflections are basically impossible given the limits on blending colours, and perhaps more could be done to improve performance, but ultimately I would never have thought any of this possible on the ZX Spectrum’s measly 3.5MHz processor.

“The interesting part of the project was figuring out how to work around all these limitations to make something that ran reasonably fast, if you consider 17 hours per frame to be reasonably fast, and looked reasonably good,” Gambetta tells me.

“In that sense, the end result exceeded the expectations I had at the beginning!”

Why the ZX Spectrum?

The ZX Spectrum was a massively popular computer in the early ’80s. Affordable, entertaining, surprisingly good-looking, and even quite educational. Part of its popularity came down to how it was possible to code your own games using its programming language, BASIC. But one had to get around the limitations of the hardware first.

“To make it so cheap its designer, Sir Clive Sinclair, cut all sorts of corners,” Gambetta tells me. “It was a limited computer even for its time.”

“I grew up with one of these at home (in Uruguay of all places). It had some apps but also tons of videogames, so it was a gateway drug into programming for an entire generation of people like me; you got it for the games, but it was very very easy to accidentally slide into programming it yourself, because it ‘booted’ not into a command line, not into a user interface, but into what we would call an IDE (or development environment) nowadays.”

Learn a bit of BASIC and you could make something on the ZX Spectrum. Not fast, nor with too many colours, but something.

“You could have some kind of visual thing moving around the screen in something like 5 lines of code, which is unthinkable these days.”

Gambetta tells me he doesn’t believe there’s anything quite so simple for dipping your toes into graphics programming these days; even the most basic programs take more code than they did on the ZX Spectrum. Yet he does plug the PICO-8 for retro gaming fans who want to try coding themselves and, of course, his own book, Computer Graphics from Scratch (available for free on his website or to buy on Amazon).

“That requires nothing but a browser and a text editor, which pretty much any computer comes with out of the box. But even that takes more steps than what we had in the ’80s.”