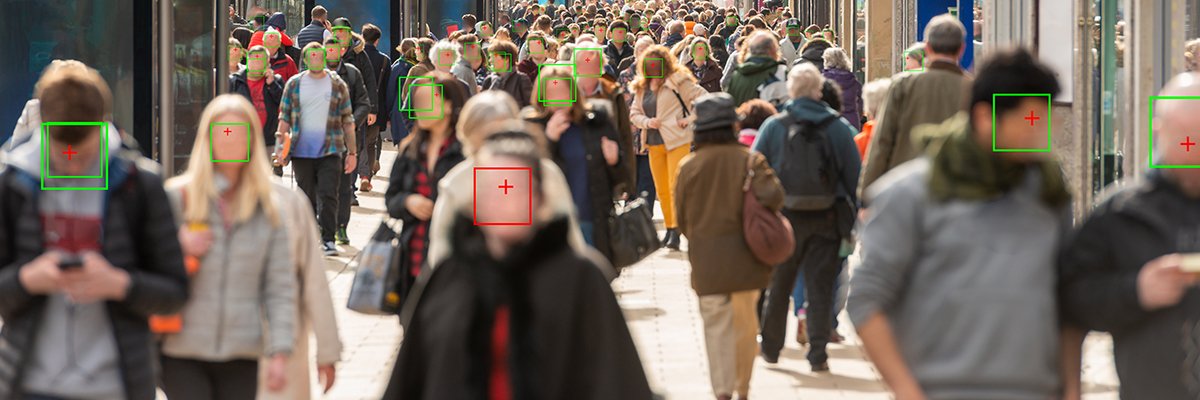

The new Labour government should place an outright ban on artificial intelligence (AI)-powered “predictive policing” and biometric surveillance systems, on the basis that they are disproportionately used to target racialised, working class and migrant communities, a civil society coalition has said.

In an open letter to home secretary Yvette Cooper, the #SafetyNotSurveillance coalition has called for an outright ban on the use of predictive policing systems that use AI and algorithms to predict, profile or assess the likelihood of criminal behaviour in specific people or locations.

The coalition – consisting of Open Rights Group (ORG), Big Brother Watch, the Network for Police Monitoring (Netpol) and 14 other human rights-focused organisations – is also calling for an outright ban on biometric surveillance systems like facial recognition, and for all other data-based, automated or AI systems in policing to be regulated to safeguard people from harms and protect their rights.

“AI is rapidly expanding into all areas of public life, but carries particularly high risks to people’s rights, safety and liberty in policing contexts,” the coalition wrote in the letter.

“Many AI systems have been proven to magnify discrimination and inequality. In particular, so-called ‘predictive policing’ and biometric surveillance systems are disproportionately used to target marginalised groups including racialised, working class and migrant communities. These systems criminalise people and infringe human rights, including the fundamental right to be presumed innocent.”

To protect people’s rights and prevent uses of AI that exacerbate structural power imbalances, the coalition is calling on the government to completely prohibit the use of predictive policing and biometric surveillance, while making all other systems that influence, inform or impact policing decisions subject to strict transparency and accountability obligations.

These obligations, the group added, should be underpinned by a new legislative framework that provides “consistent public transparency” around the systems being deployed; reduces data sharing between public authorities, law enforcement and the private sector; places accessibility requirements on AI suppliers; and ensures there is meaningful human involvement in and review of automated decisions.

The coalition added that any new legislation must also ensure there is mandatory engagement with affected communities, give people the right to a written decision by a human explaining the automated outcome, and provide clear routes to redress for both groups and individuals. Any redress mechanisms must also include support for whistleblowers, it said.

“AI and automated systems have been proven to magnify discrimination and inequality in policing,” said Sara Chitseko, pre-crime programme manager for ORG. “Without strong regulation, police will continue to use AI systems which infringe our rights and exacerbate structural power imbalances, while big tech companies profit.”

The UK government previously said in the King’s Speech that it would “seek to establish the appropriate legislation to place requirements on those working to develop the most powerful artificial intelligence models”. However, there are currently no plans for AI-specific legislation, as the only mention of AI in the background briefing to the speech is as part of a Product Safety and Metrology Bill, which aims to respond “to new product risks and opportunities to enable the UK to keep pace with technological advances, such as AI”.

The preceding government said that while it would not rush to legislate on AI, binding requirements would be needed for the most powerful systems, as voluntary measures for AI companies would likely be “incommensurate to the risk” presented by the most advanced capabilities.

Computer Weekly contacted the Home Office for comment but was told it is still considering its response.

Ongoing police tech concerns

In November 2023, the outgoing biometrics and surveillance camera commissioner for England and Wales, Fraser Sampson, questioned the crime prevention capabilities of facial recognition, arguing that the authorities were largely relying on its chilling effect, rather than its actual effectiveness in identifying wanted individuals.

He also warned of generally poor oversight over the use of biometric technologies by police, adding there are real dangers of the UK slipping into an “all-encompassing” surveillance state if concerns about these powerful technologies aren’t heeded.

Sampson had also warned in February 2023 about UK policing’s general “culture of retention” around biometric data, telling Parliament’s Joint Committee on Human Rights (JCHR) that the default among police forces was to hang onto biometric information, regardless of whether it was legally allowed.

He specifically highlighted the ongoing and unlawful retention of millions of custody images of people never charged with a crime, noting that although the High Court ruled in 2012 that these images must be deleted, the Home Office – which owns most of the biometric databases used by UK police – said it can’t be done because the database they are held on has no bulk deletion capability.

A prior House of Lords inquiry into UK policing’s use of advanced algorithmic technologies – which explored the use of facial recognition and various crime prediction tools – also found in March 2022 that these tools pose “a real and current risk to human rights and to the rule of law. Unless this is acknowledged and addressed, the potential benefits of using advanced technologies may be outweighed by the harm that will occur and the distrust it will create.”

In the case of “predictive policing” technologies, Lords noted their tendency to produce a “vicious circle” and “entrench pre-existing patterns of discrimination” because they direct police patrols to low-income, already over-policed areas based on historic arrest data.

On facial recognition, they added it could have a chilling effect on protest, undermine privacy, and lead to discriminatory outcomes.

After a short follow-up investigation looking specifically at facial recognition, Lords noted UK police were expanding their use of facial recognition technology without proper scrutiny or accountability, despite lacking a clear legal basis for their deployments. They also found there were no rigorous standards or systems of regulation in place to control forces’ use of the technology.