Remember the RTX 3080? Of course you do. While it’s easy to get caught up in the latest 40-series GPU releases, the RTX 3080 still makes for a great gaming card. It wasn’t particularly heat-prone, yet some of the third-party cards came with very large coolers to push it to the maximum. None of them, however, is going to beat the sheer size of the passive cooling solution created here, as 10 (!) CPU heatsinks bolted to a gigantic copper block on the board turn a plain-looking GPU into something of an event.

Reddit user Everynametaken9 took to the platform to show off the rough prototype of their latest creation and to ask for advice on where to take the design next.

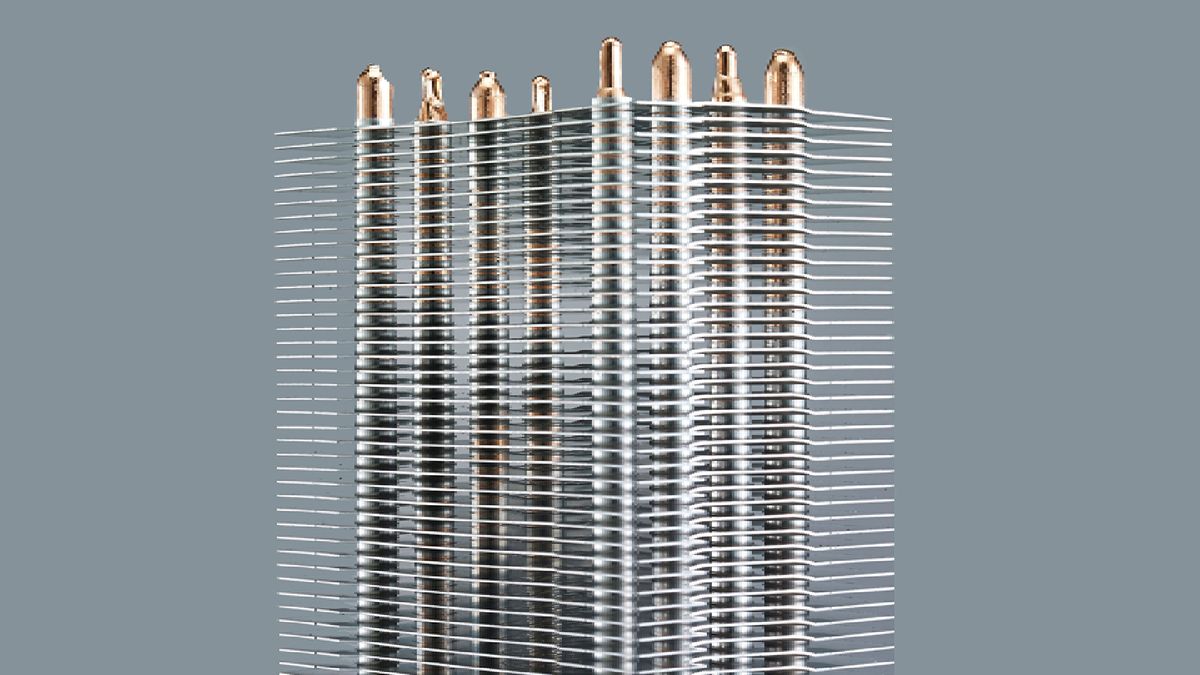

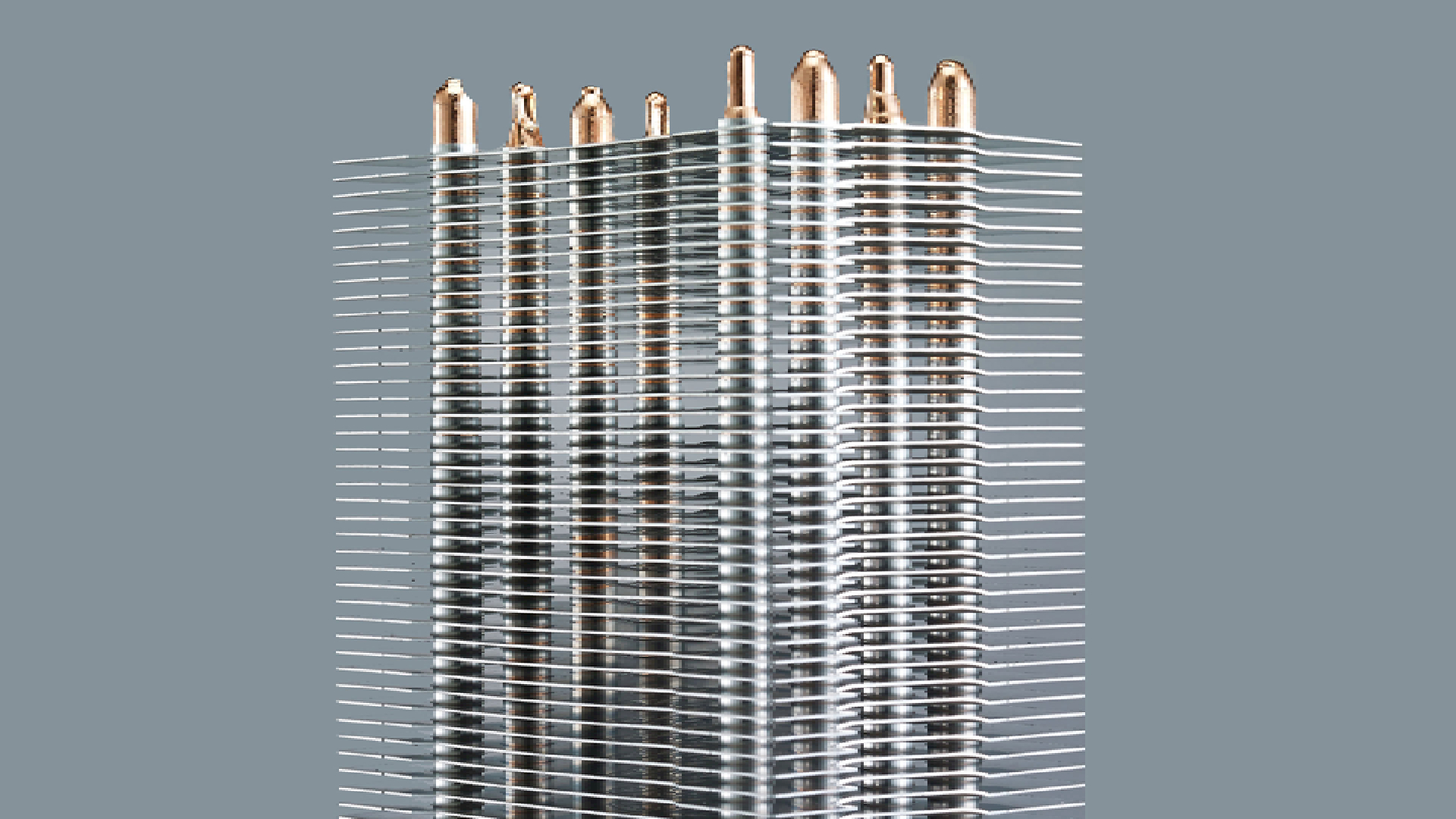

Currently, the card uses a huge copper bar as its primary heatsink. The plan is to mount the bar horizontally across the front of the board, in contact with both the die and the VRAM, and to strap 10 complete CPU heatsinks to the bar itself for extra cooling capacity.

As things stand, the configuration is very much a work in progress. The redditor is appealing to the Nvidia subreddit for advice on which components need cooling and how to improve the design, with the proviso that “passive radiation is the point, so let’s please skip the inevitable ‘just use fans’ stuff”.

Fair enough, although for what it’s worth, why don’t you just use some… never mind.

The thread is worth a read, with comments ranging from disbelief to admiration and some interesting practical advice in there as well. The top comment, from redditor Puzzleheaded-Gas9685, points out that the “massive heat block will be a heat radiator and not a cooler”. That may well be true, but I’d still like to see the results for myself, if only for the entertainment value of watching a still-powerful GPU boot up wearing the sort of cooler normally reserved for a Blake’s 7 prop.

Modern GPUs are usually very good at throttling themselves under thermal load to protect vital components, but this may well be an extreme test for any chip that puts out significant heat.

Yes, there’s a real possibility of damaging the card, but that damage is probably more likely to come from bodging the installation and dropping the thing onto the GPU than from anything else.

When it comes to science experiments, we can often learn more from failure than from success. It is through experimentation that we all benefit, and at the very least, if they haven’t created a great passively cooled GPU, they may well have created that most mysterious of things: actual art.

Or a monstrosity. Whichever, I guess. Looks cool though, don’t it?